Edited by Vinay Muskan


Slurm Command Reference

Linux_Command_Reference.pdf

Revision as of 04:15, 13 August 2024

What is HPC?

High-performance computing (HPC) is needed for the following reasons:

- It drives innovation in science, technology, business and academia.

- It improves processing speeds, which can be critical for many kinds of computing operations, applications and workloads.

- It helps lay the foundation for a reliable, fast IT infrastructure that can store, process and analyze massive amounts of data for various applications.

Specification:

- Total Cores = 8064 (AMD)

- 238 Tflops

- 57 Compute Nodes

- 2 Login Nodes

- 2 Master Nodes

- 12 high-memory nodes with local SSD, which give better performance for applications that need high-performance storage but do not scale well across nodes (e.g. Gaussian)

Memory

- 4 GB per core on compute nodes, for a total of 29,184 GB

- 16 GB per core on high-memory nodes, for a total of 12,288 GB

Compute Specification:

| Node type | Processor | RAM per node | Cores per node | GPU | No. of nodes | Total RAM | Total cores |
|---|---|---|---|---|---|---|---|
| Compute Node | AMD EPYC 7763 | 512 GB | 128 | - | 57 | 29184 GB | 7296 |
| High Memory Node | AMD EPYC 7543 | 1024 GB | 64 | - | 12 | 12288 GB | 768 |
| GPU | Intel Xeon Platinum 8358 | 512 GB | 64 | 4 × Tesla A100 | 2 | 1024 GB | 128 |
| Total | | | | | | 42496 GB | 8192 |

Storage: 750 TB PFS (parallel file system)
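Each total in the compute table follows from per-node arithmetic: total RAM is RAM per node × number of nodes, and total cores is cores per node × number of nodes. The short Python sketch below recomputes those totals and the memory per core from the per-node figures. The `NODE_TYPES` dictionary and the `summarize` helper are illustrative names, not part of any cluster tooling.

```python
# Per-node figures copied from the compute specification table.
NODE_TYPES = {
    # name: (RAM per node in GB, cores per node, number of nodes)
    "Compute Node": (512, 128, 57),
    "High Memory Node": (1024, 64, 12),
    "GPU": (512, 64, 2),
}


def summarize(node_types):
    """Return per-type (total RAM GB, total cores, GB per core) and the grand totals."""
    rows = {}
    for name, (ram_gb, cores, count) in node_types.items():
        rows[name] = (ram_gb * count, cores * count, ram_gb // cores)
    total_ram = sum(row[0] for row in rows.values())
    total_cores = sum(row[1] for row in rows.values())
    return rows, total_ram, total_cores


if __name__ == "__main__":
    rows, total_ram, total_cores = summarize(NODE_TYPES)
    for name, (ram, cores, per_core) in rows.items():
        print(f"{name}: {ram} GB RAM, {cores} cores, {per_core} GB/core")
    print(f"Total: {total_ram} GB RAM, {total_cores} cores")
```

The AMD-only core count from the specification list (8064) is the sum of the compute-node and high-memory rows. It excludes the 128 Intel cores on the GPU nodes.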

How to connect to SNU HPC?

You can connect in the following ways:

- SSH: host magus02.snu.edu.in (default port 22)

- From a Linux machine: $ ssh [email protected]

- From a Windows machine: use PuTTY.

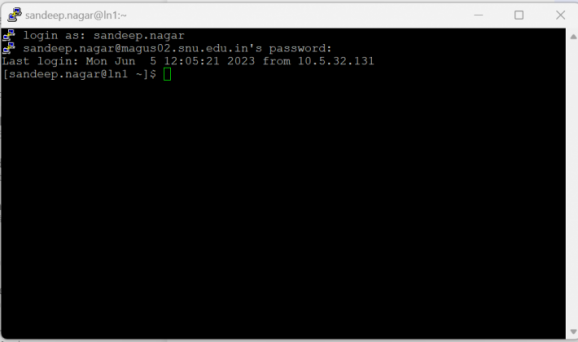
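If you want to script the login, you can build the SSH command programmatically. The sketch below is minimal and assumes a standard OpenSSH `ssh` client on your PATH. `your_username` is a hypothetical placeholder for your Magus02 login name, and the helper `build_ssh_command` and the script name `connect.py` are illustrative.

```python
import shlex
import subprocess
import sys

# Login host and port as given in the connection instructions.
HOST = "magus02.snu.edu.in"
PORT = 22


def build_ssh_command(username, host=HOST, port=PORT):
    """Return the argv list for an interactive SSH login as username@host."""
    return ["ssh", "-p", str(port), f"{username}@{host}"]


if __name__ == "__main__":
    if len(sys.argv) == 2:
        # Usage (hypothetical script name): python connect.py <your_magus02_username>
        subprocess.run(build_ssh_command(sys.argv[1]))
    else:
        # No username given: only print the command, using a placeholder name.
        print(shlex.join(build_ssh_command("your_username")))
```

Passing `-p 22` explicitly is redundant on the default port. It is kept so the port is visible if it ever changes.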

How to Reset Password on Magus02 ?

Step1. Login to magus02 account.

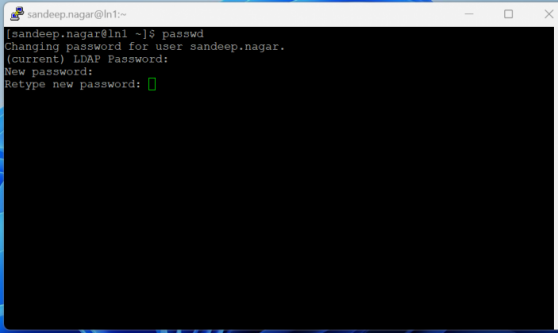

Step2: Use Passwd Command.

How to connect to Magus from outside the SNU network?

To access Magus from outside the SNU network, you must connect through the VPN. To request VPN access, click the VPN Request Form link or scan the code below.

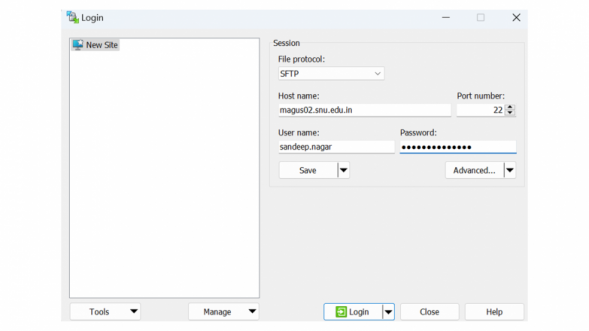

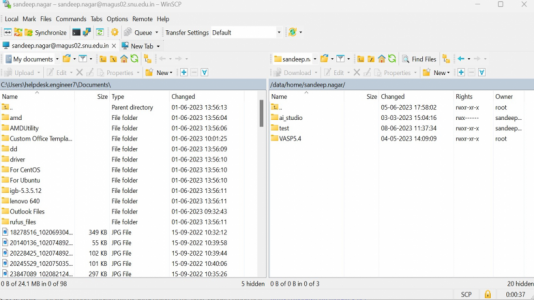

How to transfer data using WinSCP (Windows client)

Step 1: Open WinSCP, fill in the login details, then click the Login button.

Step 2: Click Yes in the warning dialog.

Step 3: Once you are connected, you can transfer data using the right-click menu in the file panels.
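WinSCP typically reaches the cluster through the same SSH service, using the SFTP or SCP protocol. From a Linux machine, you can make a comparable transfer with the standard OpenSSH `scp` client. The sketch below only builds the command lines. It assumes `scp` is installed; `your_username`, the paths, and the helper names are hypothetical placeholders.

```python
import shlex

# Same SSH endpoint used for interactive logins (host and port from the connection section).
HOST = "magus02.snu.edu.in"
PORT = 22


def build_scp_upload(username, local_path, remote_dir=""):
    """argv to copy a local file or directory into remote_dir (empty string = home directory)."""
    # scp uses capital -P for the port; -r copies directories recursively.
    return ["scp", "-P", str(PORT), "-r", local_path, f"{username}@{HOST}:{remote_dir}"]


def build_scp_download(username, remote_path, local_dir="."):
    """argv to copy a remote file or directory into local_dir."""
    return ["scp", "-P", str(PORT), "-r", f"{username}@{HOST}:{remote_path}", local_dir]


if __name__ == "__main__":
    # Placeholder username and paths; replace them with your own.
    print(shlex.join(build_scp_upload("your_username", "input_files")))
    print(shlex.join(build_scp_download("your_username", "results", ".")))
```

An empty remote path after the colon (`user@host:`) makes `scp` use your remote home directory.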

Applications on Magus

VASP, Quantum ESPRESSO, BigDFT, Gaussian, SIESTA, GROMACS, OpenFOAM.

Queue Structure on Magus02.

| S.No. | Processor architecture | Priority | Queue name | Min cores required to submit a job | Max cores allowed per job | No. of nodes in the queue | Wall time | Max cores a user can use in the queue | Max pending jobs per user | Max running jobs per user |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AMD EPYC 7763 | 50 | Medium | 32 | 128 | 17 | 1 week | 512 | 2 | 2 |
| 2 | AMD EPYC 7543 | 50 | Small | 8 | 32 | 16 | 1 week | 128 | 2 | 2 |
| 3 | AMD EPYC 7543 | 50 | High_mem | 32 | 256 | 12 | 2 weeks | 1024 | 2 | 2 |
| 4 | AMD EPYC 7763 | 50 | Large | 64 | 256 | 24 | 2 weeks | 1024 | 2 | 2 |
| 5 | Intel Xeon Platinum 8358 | 50 | GPU | 16 | 16 | 2 | 1 week | 32 | | 2 |
| 6 | AMD EPYC 7763 | Low | Long | 32 | 1024 | All lm nodes | 1 Month | 1024 | | 8 |
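A job can go to a queue only if its core count falls between that queue's minimum and maximum cores per job, and its requested run time fits within the queue's wall time. The Python sketch below encodes those two rules using the limits from the table. It also renders a matching Slurm batch header.

It rests on two assumptions:
- The Slurm partition names match the queue names shown above. Check the real names, for example with `sinfo`, before submitting.
- "1 Month" is treated as 30 days.

The per-user limits (max cores, pending and running jobs) are not checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Queue:
    name: str            # queue name as listed in the table
    min_cores: int       # minimum cores required to submit a job
    max_cores: int       # maximum cores allowed per job
    walltime_days: int   # wall-time limit in days
    max_user_cores: int  # max cores one user can use in the queue (not checked below)


# Limits transcribed from the queue table; "1 Month" is assumed to mean 30 days.
QUEUES = [
    Queue("Medium", 32, 128, 7, 512),
    Queue("Small", 8, 32, 7, 128),
    Queue("High_mem", 32, 256, 14, 1024),
    Queue("Large", 64, 256, 14, 1024),
    Queue("GPU", 16, 16, 7, 32),
    Queue("Long", 32, 1024, 30, 1024),
]


def eligible_queues(cores, days, queues=QUEUES):
    """Names of queues whose per-job core range and wall time admit the request."""
    return [
        q.name
        for q in queues
        if q.min_cores <= cores <= q.max_cores and days <= q.walltime_days
    ]


def sbatch_header(queue, cores, days):
    """Build a #SBATCH header for one queue.

    Assumes (hypothetically) that the Slurm partition name equals the queue name.
    """
    if not (queue.min_cores <= cores <= queue.max_cores):
        raise ValueError(
            f"{queue.name} accepts {queue.min_cores}-{queue.max_cores} cores per job"
        )
    if days > queue.walltime_days:
        raise ValueError(f"{queue.name} allows at most {queue.walltime_days} days")
    return "\n".join(
        [
            "#!/bin/bash",
            f"#SBATCH --partition={queue.name}",
            f"#SBATCH --ntasks={cores}",
            f"#SBATCH --time={days}-00:00:00",  # Slurm days-hours:minutes:seconds
        ]
    )


if __name__ == "__main__":
    print(eligible_queues(64, 7))
    print(sbatch_header(QUEUES[0], 64, 7))
```

For example, a 64-core job that needs one week fits Medium, High_mem, Large and Long. A 16-core, 3-day job fits only Small and GPU.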